The widget click event (called widget_click) is triggered every time a recommendation from a tracked recommendation set is clicked. Developers can get a quick summary on the Widget Events page; this page is aimed at readers interested in data science and analysis.

1. Conditions for triggering

A widget click is triggered every time a user clicks on a recommendation in a recommendation widget tracked by LiftIgniter. This includes recommendation widgets powered by LiftIgniter as well as base slices of such widgets, which are not powered by LiftIgniter but are still tracked to provide an A/B test comparison. The event is triggered both on a left click (a "click" event in JavaScript) and on a right click (a "contextmenu" event in JavaScript, since it opens the context menu).

The widget_click event can be triggered even in cases where the user does not actually visit the clicked page. This is obvious for right clicks: some people right-click in order to open the link in a new tab or window, but others may right-click to copy the link URL or use another context menu option. It could also be that the user intends to open the page but the page fails to load or the user bounces too quickly. We will still count these as clicks.
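The counting rule above can be sketched as a small predicate. This is a minimal illustration, not LiftIgniter's tracking code: the record type `RawDomEvent`, its fields, and the function `counts_as_widget_click` are hypothetical. Only the JavaScript event type names "click" and "contextmenu" come from the text.

```python
from dataclasses import dataclass

# Standard JavaScript event types that count as a widget click:
# "click" (left click) and "contextmenu" (right click).
WIDGET_CLICK_DOM_TYPES = {"click", "contextmenu"}


@dataclass
class RawDomEvent:
    # Hypothetical record layout, for illustration only.
    dom_type: str            # JavaScript event type, e.g. "click"
    in_tracked_widget: bool  # target is a recommendation in a tracked widget
    # (tracked widgets include LiftIgniter-powered widgets and base slices)
    page_loaded: bool        # whether the clicked page was actually visited


def counts_as_widget_click(event: RawDomEvent) -> bool:
    # page_loaded is deliberately ignored: a click is counted even if the
    # user never reaches the clicked page.
    return event.in_tracked_widget and event.dom_type in WIDGET_CLICK_DOM_TYPES


events = [
    RawDomEvent("click", True, True),         # ordinary left click
    RawDomEvent("contextmenu", True, False),  # right click, e.g. to copy the URL
    RawDomEvent("click", False, True),        # click outside any tracked widget
    RawDomEvent("mouseover", True, False),    # not a click at all
]
print(sum(counts_as_widget_click(e) for e in events))  # 2
```

Note that the right click that never loads a page still counts, matching the rule that clicks are recorded regardless of whether the user visits the page.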

2. Fields in widget click events

Widget click events have the following special fields that play a key role in performance tracking, analytics, and machine learning:

- Visible items (called `visibleItems`, and compactified in our JS as `vi`): This is the list of recommended items shown in the widget. It helps our machine learning systems keep track of how often specific items are shown, so that we can calculate item CTRs and better gauge the performance of specific items.
- Click URL (called `clickUrl`): This is the URL of the item clicked. It is key to our machine learning, as it tells us what the user selected. It is also important for other analytics purposes, including deduplication.
- Widget name (called `widgetName`, and compactified in our JS as `w`): This is the name of the widget. For instance, you might have different widget names like `home-page-recommendations` and `article-recommendations`.
- Source (called `source`): This identifies the algorithm used as the source of recommendations. We use `LI` for LiftIgniter's recommendations (shown as "LiftIgniter" in our Analytics) and `base` for the baseline recommendations. You can use other names.
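Because `visibleItems` and `widgetName` may arrive under their compact names `vi` and `w`, an analysis pipeline might normalize payloads before use. The sketch below assumes a payload shaped like a plain dictionary and assumes visible items are represented by URLs, so they can be matched against `clickUrl`; neither is confirmed by this page. The names `WidgetClick`, `parse_widget_click`, and `clicked_position` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

# Compact keys documented above: "vi" -> visibleItems, "w" -> widgetName.
# Any other compact names are not handled here.
COMPACT_TO_FULL = {"vi": "visibleItems", "w": "widgetName"}


@dataclass
class WidgetClick:
    visible_items: List[str]  # assumed to be item URLs
    click_url: str
    widget_name: str
    source: str               # e.g. "LI" or "base"

    def clicked_position(self) -> Optional[int]:
        """0-based position of the clicked item among the visible items, if present."""
        try:
            return self.visible_items.index(self.click_url)
        except ValueError:
            return None


def parse_widget_click(payload: Dict[str, Any]) -> WidgetClick:
    # Expand compact keys so compact and full payloads parse the same way.
    expanded = {COMPACT_TO_FULL.get(key, key): value for key, value in payload.items()}
    return WidgetClick(
        visible_items=list(expanded.get("visibleItems", [])),
        click_url=expanded["clickUrl"],
        widget_name=expanded["widgetName"],
        source=expanded["source"],
    )


payload = {
    "vi": ["https://example.com/a", "https://example.com/b"],
    "clickUrl": "https://example.com/b",
    "w": "article-recommendations",
    "source": "LI",
}
click = parse_widget_click(payload)
print(click.widget_name, click.source, click.clicked_position())
# article-recommendations LI 1
```

Grouping parsed clicks by `widget_name` and `source` then gives per-widget, per-slice click counts.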

3. Comparing widget click event values in an A/B test

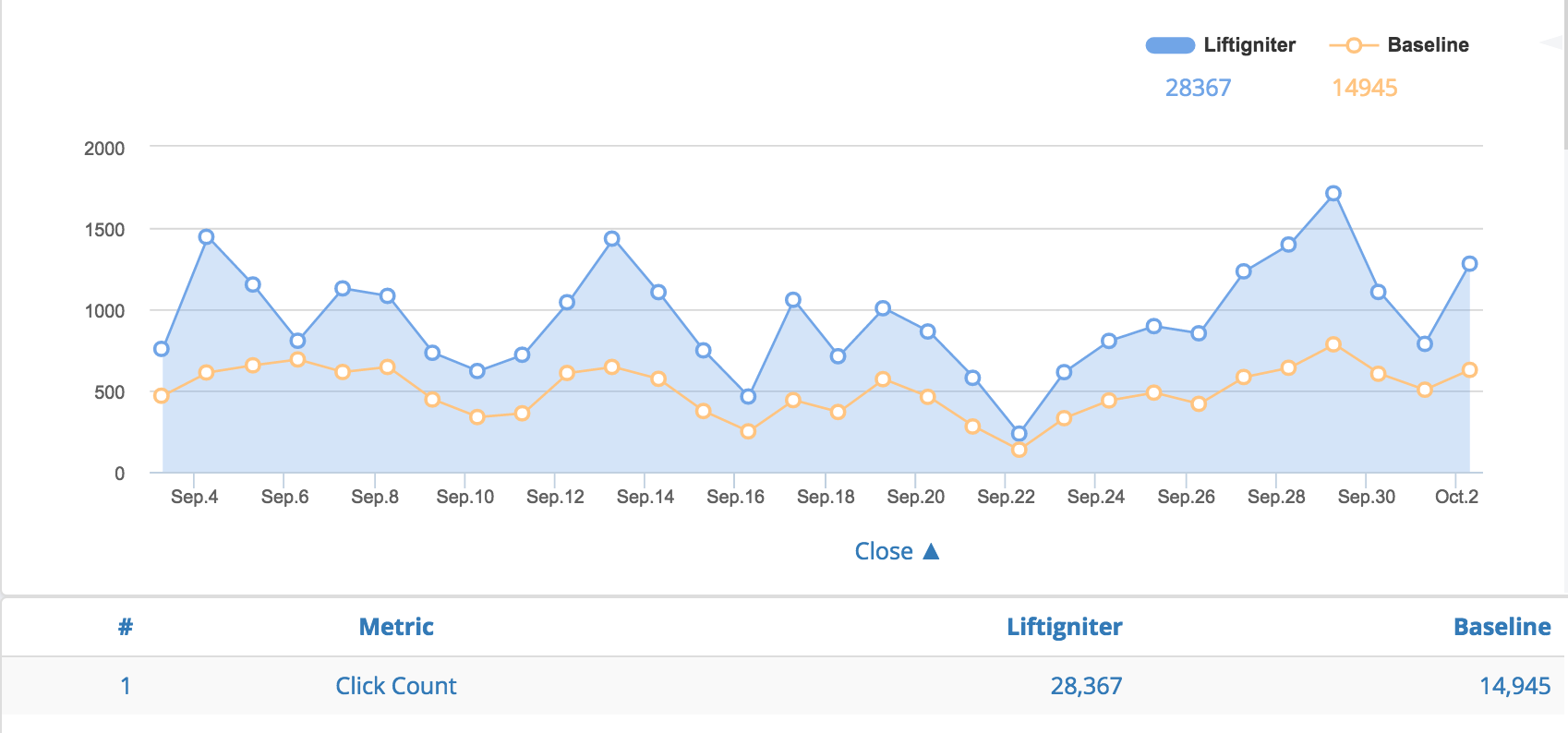

Widget click values in a fair 50/50 A/B test. LiftIgniter's slice (blue, filled solid below) performs about twice as well as the baseline slice (orange). Traffic volumes vary a lot from day to day, but LiftIgniter outperforms the baseline on every day.

LiftIgniter does A/B testing by user. This means that when we do a 50/50 A/B test, 50% of users see LiftIgniter's recommendations all the time, and the other 50% see the baseline recommendations all the time.
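One common way to get per-user assignment is to hash a stable user ID into a bucket, so a returning user always lands in the same slice. The sketch below is an illustration under that assumption, not LiftIgniter's actual mechanism; `assign_slice` and `li_share` are hypothetical names.

```python
import hashlib


def assign_slice(user_id: str, li_share: float = 0.5) -> str:
    """Deterministically assign a user to the "LI" or "base" slice.

    Hashing the user ID means the same user lands in the same slice on every
    visit, so each user sees only one kind of recommendation.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Use the first 6 bytes (48 bits) so the division below is exact in a
    # float and the bucket always lies in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "LI" if bucket < li_share else "base"


users = [f"user-{i}" for i in range(10_000)]
li_fraction = sum(assign_slice(u) == "LI" for u in users) / len(users)
print(f"{li_fraction:.3f}")  # close to 0.5, but generally not exactly 0.5
```

The realized fraction is close to, but usually not exactly, the target share, which is one reason a nominal 50/50 split may not be perfectly even.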

In such an A/B test, directly comparing the number of widget_click events in LiftIgniter's slice and the baseline slice gives a reasonably fair measure of relative performance. If the test is not 50/50, this comparison is not fair. Even in a 50/50 test the realized split may not be perfectly even, so we generally recommend comparing CTRs for a clearer picture. However, widget click comparisons can sometimes capture direct and indirect effects beyond the CTR differences. For more on the direct and indirect effects that affect how A/B test results can be interpreted, see here.
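To see why raw click counts mislead in an uneven split, the sketch below compares three ratios on hypothetical numbers from a 75/25 test. Dividing clicks by each slice's user share is an adjustment added here for illustration, not a LiftIgniter metric; the CTR ratio follows the definition in the derived-metrics table below. `SliceStats` and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SliceStats:
    widget_clicks: int
    widget_shown: int
    user_share: float  # realized fraction of users in this slice


def raw_click_ratio(a: SliceStats, b: SliceStats) -> float:
    return a.widget_clicks / b.widget_clicks


def share_adjusted_click_ratio(a: SliceStats, b: SliceStats) -> float:
    # Clicks per unit of user share: what each slice would get at 100% traffic.
    return (a.widget_clicks / a.user_share) / (b.widget_clicks / b.user_share)


def ctr_ratio(a: SliceStats, b: SliceStats) -> float:
    # CTR = widget clicks / widget shown, compared slice to slice.
    return (a.widget_clicks / a.widget_shown) / (b.widget_clicks / b.widget_shown)


# Hypothetical numbers for a 75/25 test.
li = SliceStats(widget_clicks=600, widget_shown=15_000, user_share=0.75)
base = SliceStats(widget_clicks=100, widget_shown=5_000, user_share=0.25)
print(f"raw: {raw_click_ratio(li, base):.2f}")                        # raw: 6.00
print(f"share-adjusted: {share_adjusted_click_ratio(li, base):.2f}")  # share-adjusted: 2.00
print(f"CTR: {ctr_ratio(li, base):.2f}")                              # CTR: 2.00
```

The raw ratio of 6 mostly reflects the 3:1 traffic split; after normalizing by share, or comparing CTRs, the relative performance is about 2.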

4. Derived metrics

For a more complete list of all the metrics and their relationships, see Metrics summary.

| Derived metric | Numerator | Denominator | Supported by LiftIgniter? |
|---|---|---|---|
| Click-Through Rate (CTR) | Widget Click | Widget Shown | Yes, with Tracking Widgets implemented |
| Visible Click-Through Rate (VCTR) | Widget Click | Widget Visible | Yes, with Tracking Widgets implemented |
| Conversion-to-Click Ratio | Conversion (through click) | Widget Click | Yes, with Tracking Widgets and Tracking Conversion implemented |
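The table's definitions translate directly into ratios of event counts. The sketch below assumes you already have aggregated counts for each event type; the function names are hypothetical, and returning `None` for an empty denominator is a design choice made here rather than documented behavior.

```python
from typing import Dict, Optional


def ratio(numerator: int, denominator: int) -> Optional[float]:
    # Undefined (None) when there is nothing in the denominator.
    return numerator / denominator if denominator else None


def derived_metrics(widget_click: int, widget_shown: int, widget_visible: int,
                    conversions_through_click: int) -> Dict[str, Optional[float]]:
    return {
        "CTR": ratio(widget_click, widget_shown),        # Widget Click / Widget Shown
        "VCTR": ratio(widget_click, widget_visible),     # Widget Click / Widget Visible
        "conversion_to_click": ratio(conversions_through_click, widget_click),
    }


print(derived_metrics(widget_click=50, widget_shown=2_000,
                      widget_visible=1_000, conversions_through_click=5))
# {'CTR': 0.025, 'VCTR': 0.05, 'conversion_to_click': 0.1}
```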